Researchers have uncovered gaps in Amazon’s skill vetting process for the Alexa voice assistant ecosystem that could allow a malicious actor to publish a deceptive skill under an arbitrary developer name and even make backend code changes after approval to trick users into giving up sensitive information.

The findings were presented on Wednesday at the Network and Distributed System Security Symposium (NDSS) conference by a group of academics from Ruhr-Universität Bochum and North Carolina State University, who analyzed 90,194 skills available in seven countries: the US, the UK, Australia, Canada, Germany, Japan, and France.

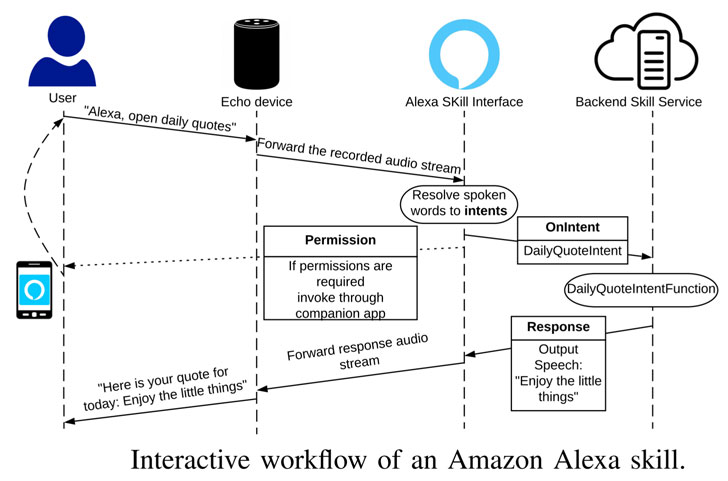

Amazon Alexa allows third-party developers to create additional functionality for devices such as Echo smart speakers by configuring “skills” that run on top of the voice assistant, thereby making it easy for users to initiate a conversation with the skill and complete a specific task.

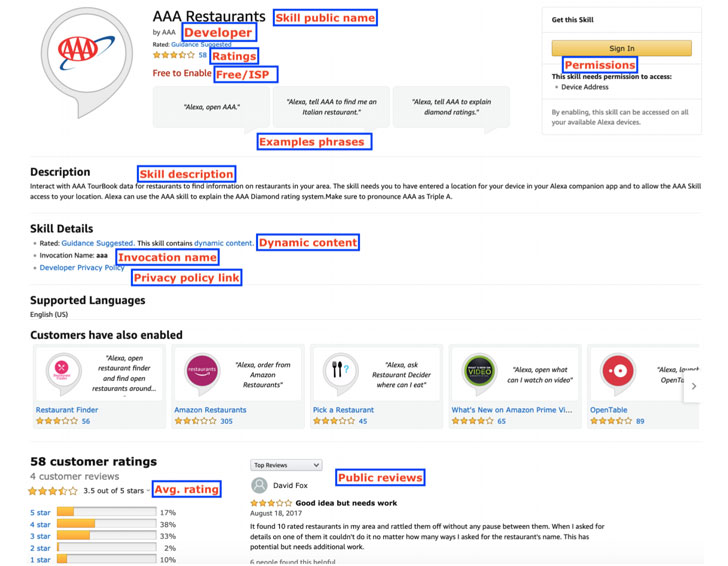

Chief among the findings is the concern that a user can activate the wrong skill, which can have severe consequences if the triggered skill is designed with insidious intent.

The pitfall stems from the fact that multiple skills can share the same invocation name.

Indeed, the practice is so prevalent that the investigation spotted 9,948 skills in the US store alone that share their invocation name with at least one other skill. Across all seven skill stores, only 36,055 skills had a unique invocation name.
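To make the "shared" versus "unique" distinction concrete, the sketch below splits a list of skills into those whose invocation name collides with at least one other skill and those whose name is unique. It is a minimal illustration, not the researchers' tooling: the `(skill_id, invocation_name)` input format, the function name `invocation_name_stats`, the lowercase/whitespace normalization rule, and the sample data are all hypothetical.

```python
from collections import Counter

def invocation_name_stats(skills):
    """Split skills into those with a shared vs. a unique invocation name.

    `skills` is an iterable of (skill_id, invocation_name) pairs. Names are
    lowercased and stripped before comparison; this normalization is an
    assumption, since the store's exact matching rule is not public.
    """
    skills = list(skills)
    normalize = lambda name: name.strip().lower()
    counts = Counter(normalize(name) for _, name in skills)
    shared = [sid for sid, name in skills if counts[normalize(name)] > 1]
    unique = [sid for sid, name in skills if counts[normalize(name)] == 1]
    return shared, unique

# Hypothetical sample data, not taken from the study.
sample = [
    ("skill-a", "Trip Planner"),
    ("skill-b", "trip planner"),
    ("skill-c", "Cat Facts"),
    ("skill-d", "Daily Quote"),
    ("skill-e", "Daily Quote "),
]
shared, unique = invocation_name_stats(sample)
print(len(shared), len(unique))  # 4 1
```

Here four of the five sample skills collide on two names, so saying "Trip Planner" or "Daily Quote" leaves the store to pick among candidates using criteria the user cannot see.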

Given that the criteria Amazon uses to auto-enable a specific skill among several skills with the same invocation name remain unknown, the researchers cautioned that users can end up activating the wrong skill and that an adversary can get away with publishing skills under well-known company names.

“This primarily happens because Amazon currently does not employ any automated approach to detect infringements for the use of third-party trademarks, and depends on manual vetting to catch such malevolent attempts which are prone to human error,” the researchers explained. “As a result users might become exposed to phishing attacks launched by an attacker.”

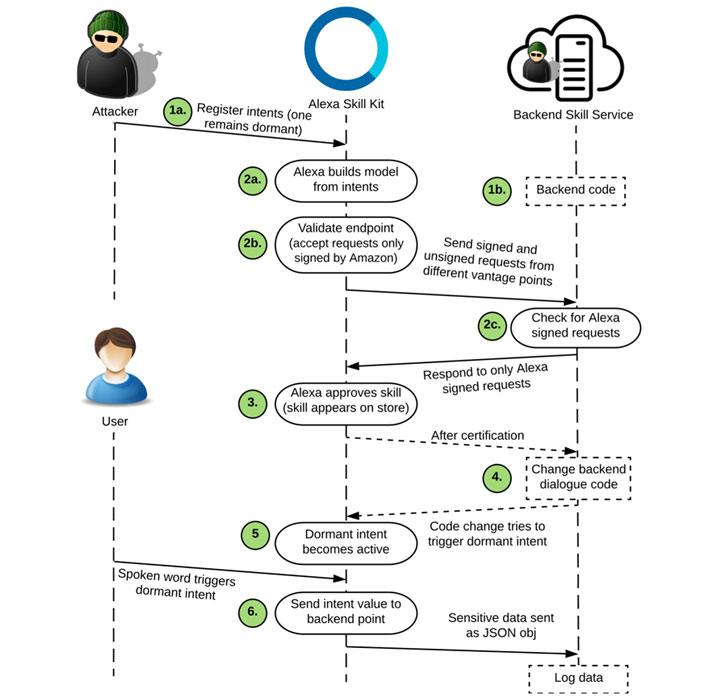

Even worse, an attacker can make code changes following a skill’s approval to coax a user into revealing sensitive information like phone numbers and addresses by triggering a dormant intent.

In a way, this is analogous to a technique called versioning that’s used to bypass verification defences. Versioning refers to submitting a benign version of an app to the Android or iOS app store to build trust among users, only to gradually replace the codebase with malicious functionality through later updates.

To test this out, the researchers built a trip planner skill that lets a user create a trip itinerary, then tweaked it after initial vetting to “inquire the user for his/her phone number so that the skill could directly text (SMS) the trip itinerary,” thereby deceiving the user into revealing personal information.
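Because the malicious behavior only appears in backend responses after certification, one way to catch it is the kind of recurring backend check the researchers recommend. The following is a hypothetical sketch, not Amazon's or the researchers' process: it fingerprints the response text each intent returned at certification time, re-probes later, and reports intents that were added, removed, or changed. The function names, intent names, and prompts are invented for illustration.

```python
import hashlib

def fingerprint(responses):
    """Map each intent name to a SHA-256 digest of the text the skill returned."""
    return {intent: hashlib.sha256(text.encode("utf-8")).hexdigest()
            for intent, text in responses.items()}

def detect_drift(certified, current):
    """Report intents that appeared, disappeared, or changed since certification."""
    before, after = fingerprint(certified), fingerprint(current)
    return {
        "added": sorted(after.keys() - before.keys()),
        "removed": sorted(before.keys() - after.keys()),
        "changed": sorted(k for k in before.keys() & after.keys()
                          if before[k] != after[k]),
    }

# Hypothetical probe results for a trip planner skill with a dormant intent.
certified = {
    "CreateTripIntent": "Where would you like to go?",
    "SendItineraryIntent": "Sorry, that feature is not available yet.",
}
current = {
    "CreateTripIntent": "Where would you like to go?",
    "SendItineraryIntent": "What is your phone number so I can text you the itinerary?",
}
print(detect_drift(certified, current))
```

The dormant `SendItineraryIntent` shows up under `"changed"`. A check like this only works if the backend cannot tell a probe apart from a real user, since a malicious backend could simply keep serving the certified responses to the checker.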

Furthermore, the study found that the permission model Amazon uses to protect sensitive Alexa data can be circumvented. This means that an attacker can directly request data from the user (e.g., phone numbers, Amazon Pay details) that is meant to be gated behind permission APIs.

The idea is that while skills requesting sensitive data must invoke the permission APIs, nothing stops a rogue developer from simply asking the user for that information directly.

The researchers said they identified 358 such skills capable of requesting information that should ideally be protected by the permission APIs.
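Spotting a skill that asks for permission-protected data directly amounts to checking what its prompts ask the user to say. The sketch below is a deliberately naive keyword heuristic and is not the method the researchers used. The `PROTECTED_PATTERNS` table and the `direct_requests` function are hypothetical, and the regexes cover only a few of the data types Alexa gates behind permissions (phone number, address, email). Expect both false positives and misses.

```python
import re

# Hypothetical patterns for a few data types that Alexa normally exposes only
# through permission APIs; a real checker would need far broader coverage.
PROTECTED_PATTERNS = {
    "phone number": re.compile(r"\b(phone|mobile|cell)\s*(number)?\b", re.IGNORECASE),
    "address": re.compile(r"\b(home|street|postal|mailing)\s+address\b", re.IGNORECASE),
    "email": re.compile(r"\be-?mail(\s+address)?\b", re.IGNORECASE),
}

def direct_requests(prompt):
    """Return the protected data types a skill prompt appears to ask the user for."""
    return sorted(kind for kind, pattern in PROTECTED_PATTERNS.items()
                  if pattern.search(prompt))

for prompt in [
    "What is your phone number so I can text you the itinerary?",
    "Please tell me your home address and email.",
    "Where would you like to travel?",
]:
    print(prompt, "->", direct_requests(prompt))
```

A skill whose prompts match these patterns but which never requests the corresponding permission is a candidate for the bypass described above.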

Lastly, an analysis of privacy policies across different skill categories found that only 24.2% of all skills provide a privacy policy link, and that around 23.3% of such skills do not fully disclose the data types associated with the permissions they request.
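Checking whether a policy "fully discloses" the requested data types boils down to comparing the permissions a skill requests against what its policy text mentions. The sketch below uses a naive case-insensitive substring match and treats a missing policy as disclosing nothing. The function name and sample inputs are hypothetical, and this is not the study's methodology, which would need to handle synonyms and paraphrases.

```python
from typing import Iterable, List, Optional

def undisclosed_data_types(requested: Iterable[str],
                           policy_text: Optional[str]) -> List[str]:
    """Return requested permission data types that the policy never mentions.

    A skill with no privacy policy (policy_text is None) discloses nothing,
    so every requested data type is returned.
    """
    text = (policy_text or "").lower()
    return sorted(p for p in requested if p.lower() not in text)

# Hypothetical skill requesting two permissions but disclosing only one.
print(undisclosed_data_types({"phone number", "email"},
                             "We collect your email address to send updates."))
print(undisclosed_data_types({"email"}, None))
```

The first call flags `phone number` as undisclosed. The second shows that a skill without any policy link fails the check for every permission it requests.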

Noting that Amazon does not mandate a privacy policy for skills targeting children under the age of 13, the study raised concerns about the lack of widely available privacy policies in the “kids” and “health and fitness” categories.

“As privacy advocates we feel both ‘kid’ and ‘health’ related skills should be held to higher standards with respect to data privacy,” the researchers said, while urging Amazon to validate developers and perform recurring backend checks to mitigate such risks.

“While such applications ease users’ interaction with smart devices and bolster a number of additional services, they also raise security and privacy concerns due to the personal setting they operate in,” they added.