Apple on Thursday said it’s introducing new child safety features in iOS, iPadOS, watchOS, and macOS as part of its efforts to limit the spread of Child Sexual Abuse Material (CSAM) in the U.S.

To that effect, the iPhone maker said it intends to begin client-side scanning of images shared via every Apple device for known child abuse content as they are being uploaded to iCloud Photos. It will also use on-device machine learning to vet all iMessage images sent or received by minor accounts (aged under 13) and warn parents of sexually explicit photos on the messaging platform.

Furthermore, Apple plans to update Siri and Search to stage an intervention when users try to perform searches for CSAM-related topics, alerting them that “interest in this topic is harmful and problematic.”

“Messages uses on-device machine learning to analyze image attachments and determine if a photo is sexually explicit,” Apple noted. “The feature is designed so that Apple does not get access to the messages.” The feature, called Communication Safety, is said to be an opt-in setting that must be enabled by parents through the Family Sharing feature.

How Child Sexual Abuse Material is Detected

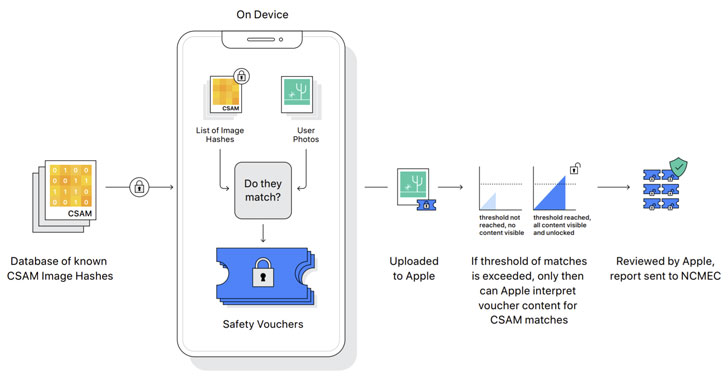

Detection of known CSAM images involves carrying out on-device matching using a database of known CSAM image hashes provided by the National Center for Missing and Exploited Children (NCMEC) and other child safety organizations before the photos are uploaded to the cloud. “NeuralHash,” as the system is called, is powered by a cryptographic technology known as private set intersection. However, it’s worth noting that while the scanning happens automatically, the feature only works when iCloud photo sharing is turned on.
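
The matching step described above can be illustrated with a minimal sketch. It is not Apple's implementation: NeuralHash's internals are not described here, so a toy "average hash" stands in for it, and the names `average_hash` and `matches_known` are hypothetical. The sketch also omits private set intersection entirely, so unlike the real system it reveals the match result directly to whoever runs it.

```python
# Toy illustration of matching an image against a set of known hashes.
# ASSUMPTION: average hashing is a stand-in; it is NOT NeuralHash.
# ASSUMPTION: images are small grayscale grids given as lists of rows.

def average_hash(pixels):
    """Return an integer with one bit per pixel: 1 if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def matches_known(pixels, known_hashes):
    """Check whether the image's hash appears in the set of known hashes."""
    return average_hash(pixels) in known_hashes


# Hypothetical 2x2 "images" used only to show the behavior.
known_image = [[10, 200], [220, 30]]
brightened_copy = [[20, 210], [230, 40]]   # same picture, slightly brighter
unrelated_image = [[200, 10], [30, 220]]

known_hashes = {average_hash(known_image)}

print(matches_known(brightened_copy, known_hashes))  # True
print(matches_known(unrelated_image, known_hashes))  # False
```

The point of a perceptual-style hash, as opposed to a cryptographic one such as SHA-256, is that small visual changes (here, a uniform brightness shift) leave the hash unchanged, so altered copies of a known image can still be matched.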

What’s more, Apple is expected to use another cryptographic principle called threshold secret sharing that allows it to “interpret” the contents only if an iCloud Photos account crosses a threshold of known child abuse imagery. The content is then manually reviewed to confirm there is a match; if there is, Apple will disable the user’s account, report the material to NCMEC, and pass it on to law enforcement.
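
To give a sense of how a threshold scheme behaves, here is a minimal sketch of Shamir's secret sharing, a well-known threshold secret sharing construction. Whether Apple uses this exact construction is not stated here, so treat it as an illustration of the principle only. The function names, the field prime, and the parameters are assumptions chosen for the example.

```python
# Toy Shamir secret sharing: any `threshold` shares recover the secret,
# fewer shares reveal nothing useful about it.
# ASSUMPTION: illustrative only; not Apple's actual protocol or parameters.
import secrets

PRIME = 2**127 - 1  # a Mersenne prime, used as the field size for this toy


def split_secret(secret, threshold, num_shares):
    """Split `secret` into `num_shares` points on a random polynomial."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret must be in [0, PRIME)")
    # Degree (threshold - 1) polynomial whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner's rule, highest degree first
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Recover the constant term by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical usage: a key split so that 3 of 5 shares are needed.
shares = split_secret(123456789, threshold=3, num_shares=5)
print(recover_secret(shares[:3]) == 123456789)  # True
```

In the setting the article describes, each matching image would contribute one share, so the material needed to decrypt only becomes available once the number of matches reaches the threshold.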

Researchers Express Concern About Privacy

Apple’s CSAM initiative has prompted security researchers to express anxieties that it could suffer from mission creep and be expanded to detect other kinds of content that could have political and safety implications, or even be used to frame innocent individuals by sending them harmless-looking but maliciously crafted images designed to appear as matches for child porn.

U.S. whistle-blower Edward Snowden tweeted that, despite the project’s good intentions, what Apple is rolling out is “mass surveillance,” while Johns Hopkins University cryptography professor and security expert Matthew Green said, “the problem is that encryption is a powerful tool that provides privacy, and you can’t really have strong privacy while also surveilling every image anyone sends.”

Apple already checks iCloud files and images sent over email against known child abuse imagery, as do tech giants like Google, Twitter, Microsoft, Facebook, and Dropbox, who employ similar image hashing methods to look for and flag potential abuse material, but Apple’s attempt to walk a privacy tightrope could renew debates about weakening encryption, escalating a long-running tug of war over privacy and policing in the digital age.

The New York Times, in a 2019 investigation, revealed that a record 45 million online photos and videos of children being sexually abused were reported in 2018, out of which Facebook Messenger accounted for nearly two-thirds, with Facebook as a whole responsible for 90% of the reports.

Apple, along with Facebook-owned WhatsApp, has continually resisted efforts to intentionally weaken encryption and backdoor its systems. That said, Reuters reported last year that the company abandoned plans to encrypt users’ full backups to iCloud in 2018 after the U.S. Federal Bureau of Investigation (FBI) raised concerns that doing so would impede investigations.

“Child exploitation is a serious problem, and Apple isn’t the first tech company to bend its privacy-protective stance in an attempt to combat it. But that choice will come at a high price for overall user privacy,” the Electronic Frontier Foundation (EFF) said in a statement, noting that Apple’s move could break encryption protections and open the door for broader abuses.

“All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children’s, but anyone’s accounts. That’s not a slippery slope; that’s a fully built system just waiting for external pressure to make the slightest change,” it added.

The CSAM efforts are set to roll out in the U.S. in the coming months as part of iOS 15 and macOS Monterey, but it remains to be seen if, or when, they will be available internationally. In December 2020, Facebook was forced to switch off some of its child abuse detection tools in Europe in response to recent changes to the European Commission’s e-privacy directive that effectively ban automated systems scanning for child sexual abuse images and other illegal content without users’ explicit consent.