Generative AI (GenAI) tools such as ChatGPT have introduced significant risks to organizations’ sensitive data. But what do we really know about this risk? New research by browser security company LayerX sheds light on its scope and nature. The report, titled “Revealing the True GenAI Data Exposure Risk,” provides crucial insights for data protection stakeholders and helps them take proactive measures.

The Numbers Behind the ChatGPT Risk

By analyzing the usage of ChatGPT and other generative AI apps among 10,000 employees, the report identifies key areas of concern. One alarming finding is that 6% of employees have pasted sensitive data into GenAI tools, with 4% doing so on a weekly basis. This recurring behavior poses a severe threat of data exfiltration for organizations.
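To put these percentages in absolute terms, the short sketch below converts them into headcounts. It assumes that both percentages apply to the full 10,000-employee sample, which the report summary suggests but does not state outright; the `headcount` helper and variable names are illustrative only.

```python
# Assumption: the 6% and 4% figures are shares of the full 10,000-employee sample.
SAMPLE_SIZE = 10_000


def headcount(percent: float, population: int = SAMPLE_SIZE) -> int:
    """Convert a percentage of the population into a whole number of people."""
    return round(population * percent / 100)


pasted_sensitive = headcount(6)  # employees who pasted sensitive data at least once
pasted_weekly = headcount(4)     # employees who paste sensitive data weekly

print(f"Pasted sensitive data: {pasted_sensitive} employees")
print(f"Paste sensitive data weekly: {pasted_weekly} employees")
```

Under that assumption, the figures correspond to roughly 600 and 400 employees in the sample, respectively.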

The report addresses vital risk assessment questions, including the actual scope of GenAI usage across enterprise workforces, the relative share of “paste” actions within this usage, the number of employees pasting sensitive data into GenAI and their frequency, the departments utilizing GenAI the most, and the types of sensitive data most likely to be exposed through pasting.

Usage and Data Exposure are on the Rise

One striking discovery is a 44% increase in GenAI usage over the last three months alone. Despite this growth, on average only 19% of an organization’s employees currently use GenAI tools. Even at this level of adoption, the risks associated with GenAI usage are significant.

The research also highlights the prevalence of sensitive data exposure. Of the employees using GenAI, 15% have pasted data into these tools, with 4% doing so weekly and 0.7% multiple times a week. This recurring behavior underscores the urgent need for robust data protection measures to prevent data leakage.

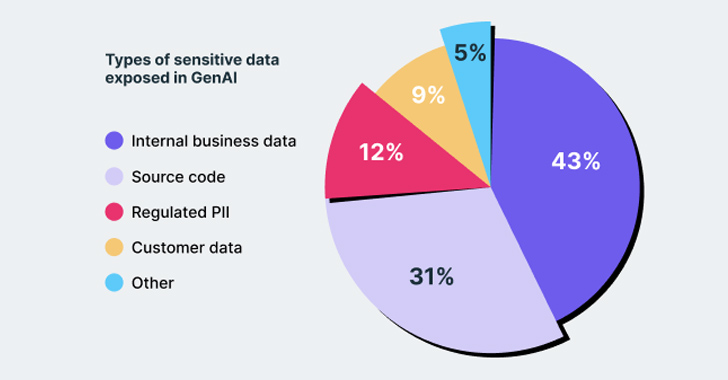

Source code, internal business information, and Personally Identifiable Information (PII) are the leading types of pasted sensitive data. This data was mostly pasted by users from the R&D, Sales & Marketing, and Finance departments.

How to Leverage the Report in Your Organization

Data protection stakeholders can use the report’s insights to build effective GenAI data protection plans. In the GenAI era, it is crucial to assess how much visibility the organization has into GenAI usage patterns and to verify that existing products can provide the necessary insights and protection. If they cannot, stakeholders should consider adopting a solution that offers continuous monitoring, risk analysis, and real-time governance of every event within a browsing session.